What Is Dynamic Rendering? A Guide to Setting Up Dynamic Rendering for CSR Pages with Nginx

The Problem

In recent years, the rise of UI frameworks such as Angular and React has transformed web development, shifting the focus to user interaction and moving UI processing entirely into the browser.

Websites built this way use Client-Side Rendering (CSR) and are commonly implemented as Single Page Applications (SPAs). They use the processing power of users' devices to reduce the load on servers. They also provide a smooth user experience: the server mainly sends JavaScript code to the device, and the app then fetches the data it needs through APIs instead of reloading the entire HTML page.

While CSR excels in certain aspects, it is not SEO-friendly out of the box. SEO (search engine optimization) is the practice of making a website discoverable through search engines such as Google or Bing. These search engines continuously send "crawlers" to visit known websites and read their content. While "crawlers" read HTML well, they often struggle with content that only appears after JavaScript runs. That is why making CSR websites SEO-friendly is crucial.

There are several ways to achieve this, such as using Server-Side Rendering (SSR) libraries or building the entire website as static HTML with Static Site Generation (SSG). Each method has its pros and cons, and the right choice depends on the use case. However, this article does not cover these two methods.

Instead, we look at another approach for making SPAs SEO-friendly that Google has described, called Dynamic Rendering. What is it exactly?

What is Dynamic Rendering?

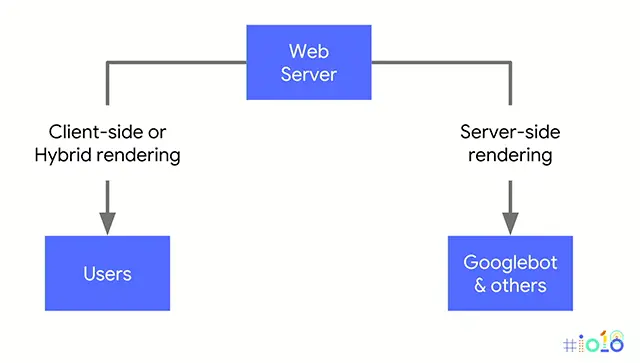

Dynamic Rendering means serving different responses depending on whether a request comes from a regular user or from a search engine "crawler". Regular users receive the JavaScript application, while "crawlers" receive a fully rendered HTML page.

Because "crawlers" are primarily interested in the content of your website, returning rendered HTML is what lets them collect that content reliably. Regular users, on the other hand, care not only about the content but also about the UI/UX, since they actively interact with the elements displayed on the website.

To tell them apart, we rely on the User-Agent string sent with each request. Well-known "crawlers" identify themselves in this string, so we can return the appropriate response based on it. This check can be done at the HTTP server level with tools like Nginx, and frameworks such as Nuxt or Next.js also provide configuration options that make this setup easier.
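The decision itself is small. As a minimal sketch in JavaScript (the language of the Express example in this article), the function below chooses between serving the client-side app and serving pre-rendered HTML based on the User-Agent. The function name chooseRendering and the short crawler pattern are illustrative assumptions; a real setup would use a fuller list, such as the Nginx map later in this article.

```javascript
// Illustrative subset of crawler identifiers (taken from the Nginx map in
// this article); case-insensitive, and without the /g flag so .test() is stateless.
const CRAWLER_PATTERN = /googlebot|bingbot|yandex|baiduspider|facebookexternalhit|twitterbot/i;

// Returns 'prerendered' for known crawlers (serve rendered HTML) and
// 'client' for everyone else (serve the JavaScript app).
function chooseRendering(userAgent) {
  // A missing or empty User-Agent header is treated as a regular browser.
  if (typeof userAgent !== 'string' || userAgent.length === 0) {
    return 'client';
  }
  return CRAWLER_PATTERN.test(userAgent) ? 'prerendered' : 'client';
}

// Example:
// chooseRendering('Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)')
```

Treating an unknown or missing User-Agent as a regular user is a deliberate default: real visitors always get the full app, and only clearly identified crawlers are sent to the renderer.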

There are various ways to implement Dynamic Rendering. We can use a third-party service such as Prerender.io, or host our own HTML rendering service with Google Chrome's Rendertron project.

Self-Hosting a Rendering Service

Rendertron is a rendering service built on headless Chrome, designed to render and serialize web pages on the fly.

A browser without a user interface may sound strange. After all, how would anyone browse the web without one? The point is that headless Chrome is not meant for regular browsing: removing the display components makes the browser lighter and easier to run in a server environment.

Rendertron can be integrated in several ways. For example, if your site is served by an Express.js app on Node, the rendertron-middleware package can forward "crawler" requests to a running Rendertron server:

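```javascript
const express = require('express');
const rendertron = require('rendertron-middleware');

const app = express();

// Forward requests from known crawler User-Agents to the Rendertron
// instance; all other requests fall through to the static SPA files.
app.use(
  rendertron.makeMiddleware({
    proxyUrl: 'http://my-rendertron-instance/render',
  })
);

// Serve the client-side app (index.html, JS bundles) from ./files
app.use(express.static('files'));

app.listen(8080);
```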

Replace http://my-rendertron-instance with the address of your Rendertron server. Detailed configuration instructions are available in the Rendertron documentation.

Then open http://my-rendertron-instance/render/your-spa-url, where your-spa-url is the full address of your CSR page. You should get back the rendered HTML of that page.
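If you want to build these test addresses in a script, a small helper can join the render endpoint and the page URL in the same format. This is a hypothetical helper (buildRenderUrl is not part of Rendertron); it only tolerates a trailing slash on the endpoint and rejects relative page URLs, on the assumption that the renderer needs an absolute URL to load.

```javascript
// Hypothetical helper: joins the Rendertron render endpoint and a page URL
// in the "<endpoint>/<page-url>" form used above, without encoding the page URL.
function buildRenderUrl(renderEndpoint, pageUrl) {
  // Drop trailing slashes so we never produce "/render//https://..."
  const base = renderEndpoint.replace(/\/+$/, '');
  // new URL() throws a TypeError for relative or malformed URLs,
  // so only absolute page URLs (with a scheme) get through.
  const parsed = new URL(pageUrl);
  return `${base}/${parsed.href}`;
}

// Usage:
// buildRenderUrl('http://my-rendertron-instance/render', 'https://example.com/about')
```

If your page URLs carry their own query strings, check whether your Rendertron version expects the page URL percent-encoded (for example with encodeURIComponent); this sketch leaves it raw to match the address format above.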

Configuring Dynamic Rendering for CSR Pages with Nginx

If your CSR website is served through Nginx, you can set up Dynamic Rendering directly in the Nginx configuration.

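First, define which User-Agent strings count as "crawlers". The map below sets $is_bot to 1 when the User-Agent matches one of the patterns (the ~* prefix makes each pattern a case-insensitive regular expression). The map directive must be placed in the http block, outside any server block: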

```nginx
map $http_user_agent $is_bot {
    default 0;
    "~*Prerender" 0;
    "~*googlebot" 1;
    "~*yahoo!\ slurp" 1;
    "~*bingbot" 1;
    "~*yandex" 1;
    "~*baiduspider" 1;
    "~*facebookexternalhit" 1;
    "~*twitterbot" 1;
    "~*rogerbot" 1;
    "~*linkedinbot" 1;
    "~*embedly" 1;
    "~*quora\ link\ preview" 1;
    "~*showyoubot" 1;
    "~*outbrain" 1;
    "~*pinterest\/0\." 1;
    "~*developers.google.com\/\+\/web\/snippet" 1;
    "~*slackbot" 1;
    "~*vkshare" 1;
    "~*w3c_validator" 1;
    "~*redditbot" 1;
    "~*applebot" 1;
    "~*whatsapp" 1;
    "~*flipboard" 1;
    "~*tumblr" 1;
    "~*bitlybot" 1;
    "~*skypeuripreview" 1;
    "~*nuzzel" 1;
    "~*discordbot" 1;
    "~*google\ page\ speed" 1;
    "~*qwantify" 1;
    "~*pinterestbot" 1;
    "~*bitrix\ link\ preview" 1;
    "~*xing-contenttabreceiver" 1;
    "~*chrome-lighthouse" 1;
    "~*telegrambot" 1;
}
```
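The order of entries in this map matters: Nginx tries regular expressions in the order they appear and uses the first one that matches, falling back to default when none do. That is why "~*Prerender" 0 is listed first, presumably so that requests from the Prerender.io service itself are never classified as bots even if they mention another crawler. The JavaScript sketch below mirrors that evaluation so you can check a User-Agent against the list before deploying; only a subset of the patterns is copied, and the names BOT_RULES and isBot are hypothetical.

```javascript
// Ordered [pattern, value] pairs mirroring part of the Nginx map.
// "~*" in Nginx means a case-insensitive regex, hence the /i flag.
const BOT_RULES = [
  [/Prerender/i, 0], // listed first, so it wins over every crawler pattern below
  [/googlebot/i, 1],
  [/yahoo! slurp/i, 1],
  [/bingbot/i, 1],
  [/facebookexternalhit/i, 1],
  [/twitterbot/i, 1],
  [/linkedinbot/i, 1],
  [/slackbot/i, 1],
  [/discordbot/i, 1],
  [/telegrambot/i, 1],
];
const DEFAULT_VALUE = 0; // corresponds to "default 0;"

// First matching rule decides the value, as with regex entries in an Nginx map.
function isBot(userAgent) {
  const ua = userAgent ?? '';
  for (const [pattern, value] of BOT_RULES) {
    if (pattern.test(ua)) return value;
  }
  return DEFAULT_VALUE;
}

// Example:
// isBot('Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)')
```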

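Next, configure the server block to route requests from these User-Agents to the Rendertron server. The rewrite is internal (the client never sees a redirect), and $request_uri keeps the original request URI, so Rendertron receives the public URL of the requested page: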

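```nginx
server {
    listen 80;
    server_name example.com;

    # ...

    # Internally rewrite crawler requests into the /rendertron/ location.
    if ($is_bot = 1) {
        rewrite ^(.*)$ /rendertron$1;
    }

    location /rendertron/ {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $remote_addr;
        # $request_uri is the original, unrewritten URI. Because this line
        # contains variables, my-rendertron-instance must be defined as an
        # upstream or be resolvable through a resolver directive.
        proxy_pass http://my-rendertron-instance/render/$scheme://$host:$server_port$request_uri;
    }
}
```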

Don't forget to reload Nginx (nginx -s reload), then test your website with a browser or a tool such as Postman using a "crawler" User-Agent string to check that it returns rendered HTML.

Here is an example of a Googlebot User-Agent string:

Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)
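To repeat this check from a script instead of Postman, here is a minimal sketch that requests the same URL twice, once with the Googlebot User-Agent above and once with a regular browser User-Agent, and compares how much visible text each response contains. It assumes Node.js 18 or later (global fetch); the browser User-Agent, the helper names, and the text-length heuristic are illustrative assumptions rather than a standard test.

```javascript
// Googlebot User-Agent from the example above.
const GOOGLEBOT_UA =
  'Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 ' +
  '(KHTML, like Gecko) Chrome/101.0.4951.64 Mobile Safari/537.36 ' +
  '(compatible; Googlebot/2.1; +http://www.google.com/bot.html)';
// Assumed regular desktop browser User-Agent.
const BROWSER_UA =
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 ' +
  '(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36';

// Rough heuristic: length of the text left after dropping <script>/<style>
// blocks and all tags. An unrendered SPA shell usually has very little.
function visibleTextLength(html) {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, '')
    .replace(/<style[\s\S]*?<\/style>/gi, '')
    .replace(/<[^>]+>/g, '')
    .replace(/\s+/g, ' ')
    .trim().length;
}

// Fetch a URL with the given User-Agent and return status plus body.
async function fetchAs(url, userAgent) {
  const res = await fetch(url, { headers: { 'User-Agent': userAgent } });
  return { status: res.status, html: await res.text() };
}

// Request the page as Googlebot and as a browser, and summarize both.
async function compareBotAndBrowser(url) {
  const [bot, browser] = await Promise.all([
    fetchAs(url, GOOGLEBOT_UA),
    fetchAs(url, BROWSER_UA),
  ]);
  return {
    botStatus: bot.status,
    browserStatus: browser.status,
    botTextLength: visibleTextLength(bot.html),
    browserTextLength: visibleTextLength(browser.html),
  };
}

// Usage (replace with your own site):
// compareBotAndBrowser('https://example.com/').then(console.log);
```

If the setup works, the bot response should contain noticeably more visible text than the browser response, since only the former has been rendered by Rendertron.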

Conclusion

Single Page Apps are a popular approach to web development because of the advantages they offer, but they are not SEO-friendly out of the box. Dynamic Rendering is one way to make SPAs accessible to search engines.


Comments (1)

I found this approach noticeably slower, but that's fine since it's only for bots :D

That's right, but if it gets too slow that's not good either: as far as I know, Google's algorithm also takes page speed into account, so it's worth keeping in mind.