Logging Node.js Applications in 3 Levels

Problem

As developers, we know that every line of code added increases the risk of introducing bugs. It can take months to develop a new feature and have it working perfectly in the development environment, only to encounter issues when deploying to the production environment!

In such situations, instead of scratching our heads over what went wrong, we should check the system logs first. Logs can come from the server, from DevOps tools, or from log statements we add to our own code.

This is why logging matters. Without logs, we have no idea what is happening when something goes wrong. But not just any logs will do: they should be systematic, organized, and genuinely useful.

In this article, I will outline three levels of logging. Keep in mind that this is just my personal perspective, so treat it as a reference!

Basic Logging

Use console.log everywhere, whenever you need it. Want to see the log of an API request? console.log. Want to see the body of a request? console.log. Want to see the value of variable y at line x? console.log...

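However, console.log has real drawbacks. In Node.js, writes to stdout are synchronous in many cases (for example, when writing to files, or to terminals on Linux), so heavy logging can block the event loop. It also clutters the console: output scrolls away and is lost, and there is no central place to manage it, which limits how useful console.log really is.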

If you still prefer console.log, try wrapping it in a function such as println that takes the value to log and can be switched on or off with an environment variable:

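```javascript
// Log only when the isEnabledLog environment variable is set to "true".
// Environment variables are always strings, so compare against the string 'true'.
function println(data) {
  if (process.env.isEnabledLog === 'true') {
    console.log(data);
  }
}
```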

Then call println wherever you would otherwise call console.log. Because every log goes through one function, you can change how logs are presented in one place and toggle logging on or off without touching the call sites.
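As a minimal sketch of that "one place" idea, the version below adds a timestamp and a level to every line. The helper formatLine and the level parameter are hypothetical additions for illustration, not part of the original println.

```javascript
// Hypothetical helper: builds "[ISO timestamp] LEVEL message" for every log line,
// so the presentation is defined in exactly one place.
function formatLine(data, level = 'info') {
  // Objects are serialized as JSON so they stay on one line.
  const text = typeof data === 'string' ? data : JSON.stringify(data);
  return `[${new Date().toISOString()}] ${level.toUpperCase()} ${text}`;
}

function println(data, level = 'info') {
  // Same on/off switch as before: only log when isEnabledLog is "true".
  if (process.env.isEnabledLog === 'true') {
    console.log(formatLine(data, level));
  }
}

println('Server started');
println({ userId: 42, action: 'login' }, 'debug');
```

Run it as `isEnabledLog=true node app.js` to see output; without the variable, nothing is printed.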

We can also use libraries to make logs more readable, for example colors to color the output, or formatting each log according to its level (info, debug, error, ...).
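To show the idea without a dependency, here is a minimal sketch that colors each line by level using raw ANSI escape codes, which most terminals support. The level names and color choices are assumptions; a library such as colors wraps the same escape codes.

```javascript
// ANSI escape codes for a few common colors (assumed level-to-color mapping).
const COLORS = {
  error: '\x1b[31m', // red
  warn: '\x1b[33m',  // yellow
  info: '\x1b[36m',  // cyan
  debug: '\x1b[90m', // gray
};
const RESET = '\x1b[0m';

// Prefix the message with its level and color the whole line by level.
// Unknown levels are printed without a color.
function colorize(level, message) {
  const color = COLORS[level] || '';
  return `${color}[${level.toUpperCase()}] ${message}${RESET}`;
}

console.log(colorize('info', 'Server listening on port 3000'));
console.log(colorize('error', 'Database connection failed'));
```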

Purposeful Logging

Logging is not just about printing to the console. We need to decide what to log and make sure we can trace it back at any time. At the API level, we log incoming requests to the server and any runtime errors that occur while the application runs. These logs can be written to a *.log file, stored in a database, or sent to any other form of storage.
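As a minimal sketch of recording runtime errors in a *.log file, the code below turns an Error into a structured entry (timestamp, message, and the location taken from the first stack frame) and appends it as one JSON line. The function names and the error.log file name are hypothetical, and the stack parsing assumes V8's "at ..." frame format.

```javascript
const fs = require('node:fs');

// Build a structured entry: when it happened, what the message was,
// and where the error was created (the first "at ..." stack frame).
function toErrorLogEntry(err, now = new Date()) {
  const frame = (err.stack || '')
    .split('\n')
    .map((line) => line.trim())
    .find((line) => line.startsWith('at '));
  return {
    timestamp: now.toISOString(),
    level: 'error',
    message: err.message,
    location: frame ? frame.slice(3) : 'unknown',
  };
}

// Append the entry as one JSON line so the file can be read line by line later.
// appendFileSync blocks briefly, which is acceptable on an error path.
function logError(file, err) {
  fs.appendFileSync(file, JSON.stringify(toErrorLogEntry(err)) + '\n');
}

// Usage (hypothetical): record errors that nothing else caught, then exit.
// process.on('uncaughtException', (err) => { logError('error.log', err); process.exit(1); });
```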

The format can vary: plain text lines, JSON, or structured records in a database. Whatever the format, every entry must include a timestamp and a message, and error entries must also include the error message and the location where the error occurred. For example, here is a log entry recording an incoming API request:

[2022-07-06T04:00:06Z] 200 - 13ms GET - /api/v1/article/pure-function-trong-javascript-tai-sao-chung-ta-nen-biet-cang-som-cang-tot-15 1.54.251.103 - Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36 INFO
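A minimal sketch of how such a line could be produced with Node's built-in http module: wrap the request handler, measure the time until the response finishes, then format the fields in the same order as the sample entry above. The function names, the '-' placeholder for a missing user agent, and the rule "status 500 and above is ERROR, otherwise INFO" are assumptions for illustration.

```javascript
// Format one access-log line in the shape of the sample:
// [time] status - durationms METHOD - url ip - user-agent LEVEL
function formatAccessLog({ time, status, durationMs, method, url, ip, userAgent, level }) {
  // toISOString() includes milliseconds; drop them to match the sample.
  const ts = time.toISOString().replace(/\.\d{3}Z$/, 'Z');
  return `[${ts}] ${status} - ${durationMs}ms ${method} - ${url} ${ip} - ${userAgent} ${level}`;
}

// Wrap a plain (req, res) handler so every finished response is logged.
function withAccessLog(handler, log = console.log) {
  return (req, res) => {
    const start = process.hrtime.bigint();
    res.on('finish', () => {
      const durationMs = Math.round(Number(process.hrtime.bigint() - start) / 1e6);
      log(formatAccessLog({
        time: new Date(),
        status: res.statusCode,
        durationMs,
        method: req.method,
        url: req.url,
        ip: req.socket.remoteAddress,
        userAgent: req.headers['user-agent'] || '-',
        level: res.statusCode >= 500 ? 'ERROR' : 'INFO', // assumed rule
      }));
    });
    handler(req, res);
  };
}

// Usage with node:http (not started here):
// require('node:http').createServer(withAccessLog((req, res) => res.end('ok'))).listen(3000);
```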

In Node.js, a popular logging library is winston. It supports logging levels, formatting, multiple transports (log destinations), querying, streaming, and more.

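```javascript
const winston = require('winston');

// Log "info" and above as JSON lines with a timestamp,
// both to the console and to a file named combined.log.
const logger = winston.createLogger({
  level: 'info',
  format: winston.format.combine(
    winston.format.timestamp(),
    winston.format.json()
  ),
  transports: [
    new winston.transports.Console(),
    new winston.transports.File({ filename: 'combined.log' })
  ]
});

logger.info('Hello again distributed logs');
```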

Now every log is printed to the console and also appended to combined.log, so logs are not lost when the console scrolls or the process restarts, and they can be reviewed later.

Advanced Logging

This is still purposeful logging, except now we use dedicated log management tools or cloud-based services.

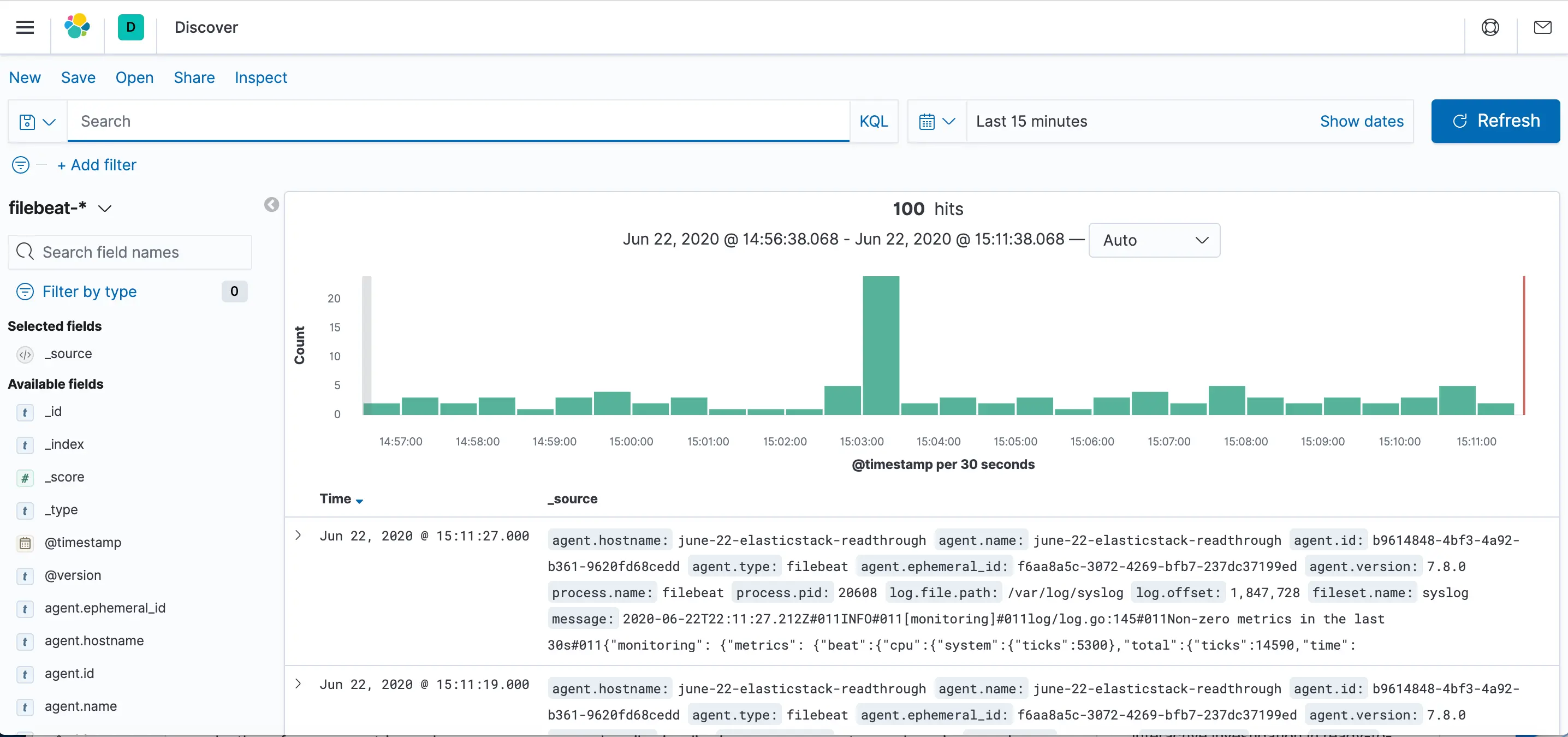

ELK (Elasticsearch, Logstash, Kibana) is a mature stack for log management and visualization. Logstash collects and processes logs, Elasticsearch stores and indexes them for fast search, and Kibana lets us monitor and analyze them visually through customizable charts. However, becoming proficient with ELK takes some time.

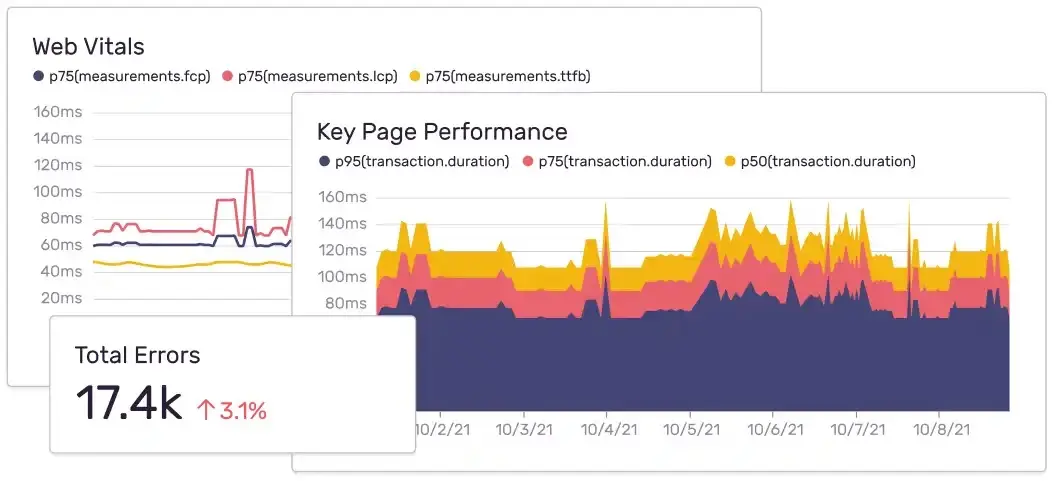

Apart from ELK, many service providers offer log storage, search, and analysis. Sentry is one example: it focuses on error tracking, aggregating errors and events from various sources and providing statistics and analysis on them. The downside of these services is that they usually charge for higher volumes, and free plans tend to limit features and storage. Still, if you are working on a small to medium-sized project, give them a try and see what they offer. Who knows, you might invest in them in the future!

Summary

In this article, I discussed three levels of logging. Whatever the level, the purpose of logging is to track issues and make them easy to debug. At the advanced level, logging adds statistics and visualization, which help us spot problems early and address them before they grow.


Comments (1)

One problem: if you write logs to a file, at some point the file becomes too large. What should you do then?


Actually, there are many ways to solve this, but in my opinion you could, for example, split log files by day, month, or year so they are easier to keep track of.
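The reply's suggestion of splitting logs by date can be sketched in plain Node.js: derive the file name from the current date, so a new file starts automatically each day. The app-YYYY-MM-DD.log naming and the use of the UTC date are assumptions, and this sketch does not delete old files. Existing tools cover the same need more completely, for example the winston-daily-rotate-file transport for winston or logrotate on Linux.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Build a per-day file name such as "app-2022-07-06.log" (UTC date).
function dailyLogFile(dir, date = new Date()) {
  const day = date.toISOString().slice(0, 10); // "YYYY-MM-DD"
  return path.join(dir, `app-${day}.log`);
}

// Append one line to the file for the given day, creating the directory if needed.
function writeLog(dir, line, date = new Date()) {
  fs.mkdirSync(dir, { recursive: true });
  fs.appendFileSync(dailyLogFile(dir, date), line + '\n');
}

writeLog('logs', 'Server started');
```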