Using Large Language Models (LLMs) for Free with a ChatGPT-Compatible API

The Issue

Large language models (LLMs) are very large deep learning models pre-trained on massive amounts of data. They are remarkably versatile: a single model can perform entirely different tasks, such as answering questions, summarizing documents, translating languages, and completing sentences. One of the most popular is OpenAI's ChatGPT.

Through the ChatGPT API, these models can be integrated into applications to build many intelligent, innovative features. For example, ChatGPT can serve as a text translation tool, a chatbot, or an engine for logic-processing tasks described in natural language.

However, calls to the ChatGPT API cost money. You pay according to the number of input and output tokens (chunks of text, often parts of words). The longer your prompts and the longer ChatGPT's replies, the more you pay.

I previously wrote an article on how to use GPT-4 for free through GitHub Copilot, warning readers that the trick could put their Copilot accounts at risk. I used this method for a long time, until GitHub sent me a warning email and I had to stop.

After that, I looked for another replacement for the ChatGPT API without success, because most free LLM services only allow interaction through a web interface. Some open-source libraries on GitHub claim to offer free access to the GPT API, but they seem unstable.

In fact, many tech giants have "open-sourced" their large language models: notably Gemma from Google, Llama from Meta (Facebook), and the well-known Mistral model from Mistral AI. Many articles have benchmarked and compared these models, and they all deliver reasonably good results in certain areas ("reasonably" because they still fall short of many paid models such as GPT-4). Readers can refer to Comparison of Models: Quality, Performance & Price Analysis.

Another drawback is that each model has its own installation and usage method, and most ship with only very rudimentary interfaces. Once you start one up, you may be able to interact with it only from the command line. If you're lucky, you might find libraries in more familiar programming languages such as Python, Go, or JavaScript.

As a result, the language model landscape is fragmented, and we have to build our own API layer if we want to interact with these models more conveniently.

Just when I thought I had hit a dead end, I came across a tool called Ollama. Ollama lets you install and run open-source language models for free with a single command. It currently supports macOS, Windows, and Linux.

To install Ollama on Linux, use the command below (on macOS and Windows, download the installer from the Ollama website instead):

$ curl -fsSL https://ollama.com/install.sh | sh

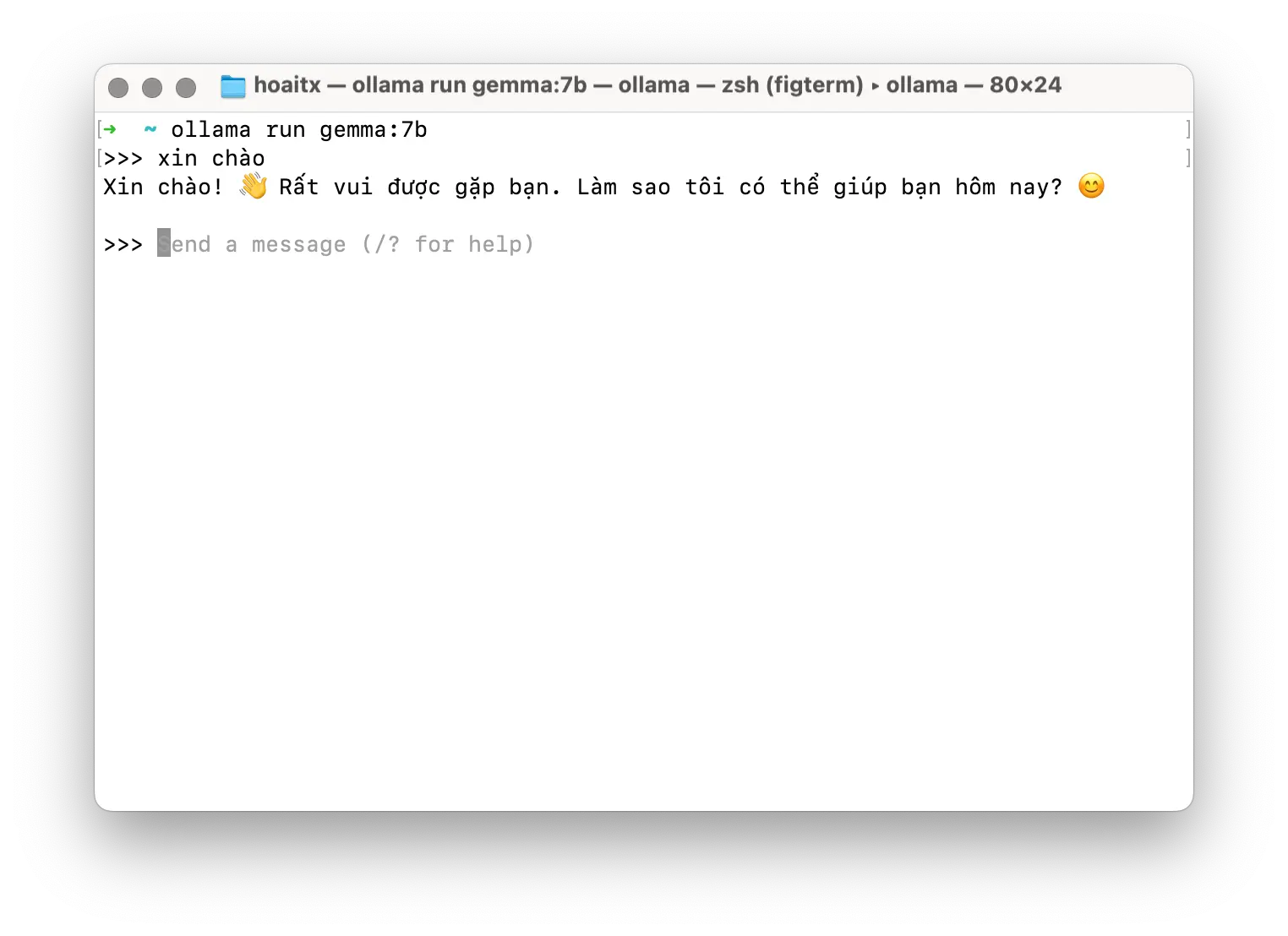

Wait a moment for the installation to complete. Want to use Google's Gemma model? Just type one more command:

$ ollama run gemma:7b

Gemma comes in two sizes: 2 billion parameters (2B) and 7 billion parameters (7B). The command above downloads and runs the 7B model. For the full list of models that Ollama supports, see the Ollama library. Note that the larger the model, the more processing power and RAM it requires.
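To put the RAM note in numbers, here is a rough back-of-the-envelope sketch (my own illustration, not an Ollama tool): the weights alone take about parameters × bits per weight ÷ 8 bytes. The parameter counts are the nominal 2B and 7B, and the bit widths (16-bit unquantized, 4-bit as a common quantization) are assumptions; check the Ollama library page for the actual download size of each model. The estimate ignores the context cache and runtime overhead, so real usage is higher.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Rough lower bound (in decimal GB) for holding a model's weights in memory.

    Ignores the context (KV) cache, activations and runtime overhead.
    """
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


if __name__ == "__main__":
    # Nominal sizes; 16-bit is unquantized half precision, 4-bit a common quantization.
    for label, params in [("2B", 2e9), ("7B", 7e9)]:
        for bits in (16, 4):
            gb = estimate_weight_memory_gb(params, bits)
            print(f"{label} at {bits}-bit: ~{gb:.1f} GB")
```

Under these assumptions, the 7B model needs roughly 14 GB at 16-bit precision but only about 3.5 GB at 4-bit, which is why quantized models are practical on ordinary laptops.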

Once the model has downloaded, you will see a prompt for text input; try typing anything.

That's Gemma responding. Now you can use this model right on your computer.

Type /bye to exit; Ollama keeps running in the background. To see which models are currently running, use ollama ps. To list all downloaded models, use ollama ls. To run a model, use ollama run <model_name>.
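The ollama ls command also has a REST counterpart, GET /api/tags, which returns the downloaded models as JSON. The sketch below uses only the Python standard library; the helper names parse_model_names and list_local_models are hypothetical, and it assumes Ollama is listening on its default address, http://localhost:11434. It returns the names you would pass to ollama run.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address used in this article


def parse_model_names(payload: dict) -> list:
    """Return the model names listed in an /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list:
    """Ask a running Ollama server which models have been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return parse_model_names(json.load(resp))


# Example (requires Ollama to be running):
# for name in list_local_models():
#     print(name)
```

Parsing is kept separate from the HTTP call so it can be checked against a saved response without a running server.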

Ollama also exposes a REST API, so you can interact with the language models programmatically.

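For example, the following request starts a conversation with roles, similar to ChatGPT. It uses the llama3 model, so download it first or replace it with a model you already have, such as gemma:7b: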

$ curl http://localhost:11434/api/chat -d '{
  "model": "llama3",
  "messages": [
    { "role": "user", "content": "why is the sky blue?" }
  ]
}'
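Note that /api/chat streams by default: the reply arrives as a series of JSON objects, one per line, each carrying a text fragment in message.content plus a done flag (add "stream": false to the request to receive a single object instead). A minimal Python sketch that sends the same request and stitches the fragments back together might look like this; the function names are hypothetical, and it assumes the llama3 model is available locally.

```python
import json
import urllib.request
from typing import Iterable

OLLAMA_URL = "http://localhost:11434"  # default address used in this article


def build_chat_payload(model: str, messages: list, stream: bool = True) -> dict:
    """Request body for Ollama's native /api/chat endpoint."""
    return {"model": model, "messages": messages, "stream": stream}


def join_streamed_reply(lines: Iterable[str]) -> str:
    """Concatenate the content fragments from streamed /api/chat output.

    Each non-empty line is one JSON object; the last one has "done": true.
    """
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        chunk = json.loads(line)
        parts.append(chunk.get("message", {}).get("content", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def chat(model: str, messages: list, base_url: str = OLLAMA_URL) -> str:
    """Send a chat request and return the full assistant reply as one string."""
    body = json.dumps(build_chat_payload(model, messages)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    # Iterating over the HTTP response yields the body one line (bytes) at a time.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return join_streamed_reply(raw.decode("utf-8") for raw in resp)


# Example (requires a running Ollama server with llama3 downloaded):
# print(chat("llama3", [{"role": "user", "content": "why is the sky blue?"}]))
```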

Interestingly, Ollama includes a compatibility layer for the ChatGPT (OpenAI) API. It is not fully compatible, but the most essential feature, Chat Completions, is supported. Take a look at this API call; does it look familiar?

$ curl http://localhost:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gemma:7b",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
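The same Chat Completions call can be made from Python with only the standard library. This is a sketch under assumptions: the helper names build_request, extract_reply and chat_completion are hypothetical, Ollama is assumed to be on its default port, and the response is assumed to follow the OpenAI Chat Completions shape, with the answer in choices[0].message.content.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/v1"  # Ollama's OpenAI-compatible prefix


def build_request(model: str, user_text: str,
                  system_text: str = "You are a helpful assistant.") -> urllib.request.Request:
    """Build a POST request mirroring the curl example above."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),  # supplying data makes this a POST
        headers={
            "Content-Type": "application/json",
            # Placeholder key in OpenAI style; Ollama does not use it.
            "Authorization": "Bearer ollama",
        },
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion."""
    return response_body["choices"][0]["message"]["content"]


def chat_completion(model: str, user_text: str) -> str:
    """Send the request and return the reply (needs a running Ollama server)."""
    with urllib.request.urlopen(build_request(model, user_text), timeout=300) as resp:
        return extract_reply(json.load(resp))


# Example: print(chat_completion("gemma:7b", "Hello!"))
```

Because the endpoint mimics OpenAI's, the official openai Python package should also work when pointed at base_url="http://localhost:11434/v1" with a placeholder API key such as ollama.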

http://localhost:11434 is the local address where Ollama serves its API (port 11434 by default).

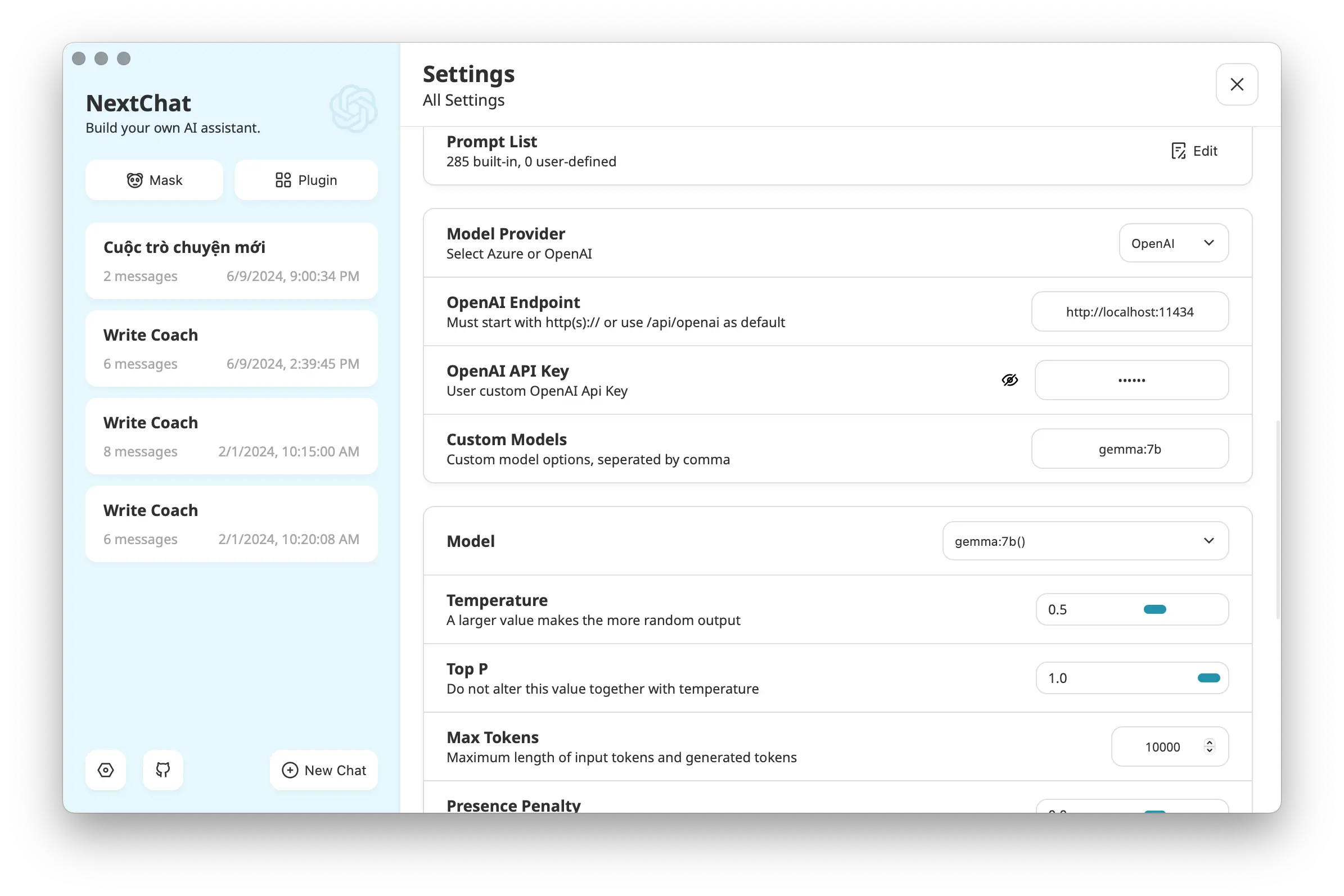

Remember NextChat? Let's plug this new API into NextChat to manage our conversations.

In the "OpenAI Endpoint" box, enter http://localhost:11434. Set the OpenAI API Key to ollama (Ollama does not actually use the key). In Custom Models, enter the name of the model you are running, for example gemma:7b for Google's Gemma 7B. Then select that same model from the model list, so that it matches what you entered under Custom Models.

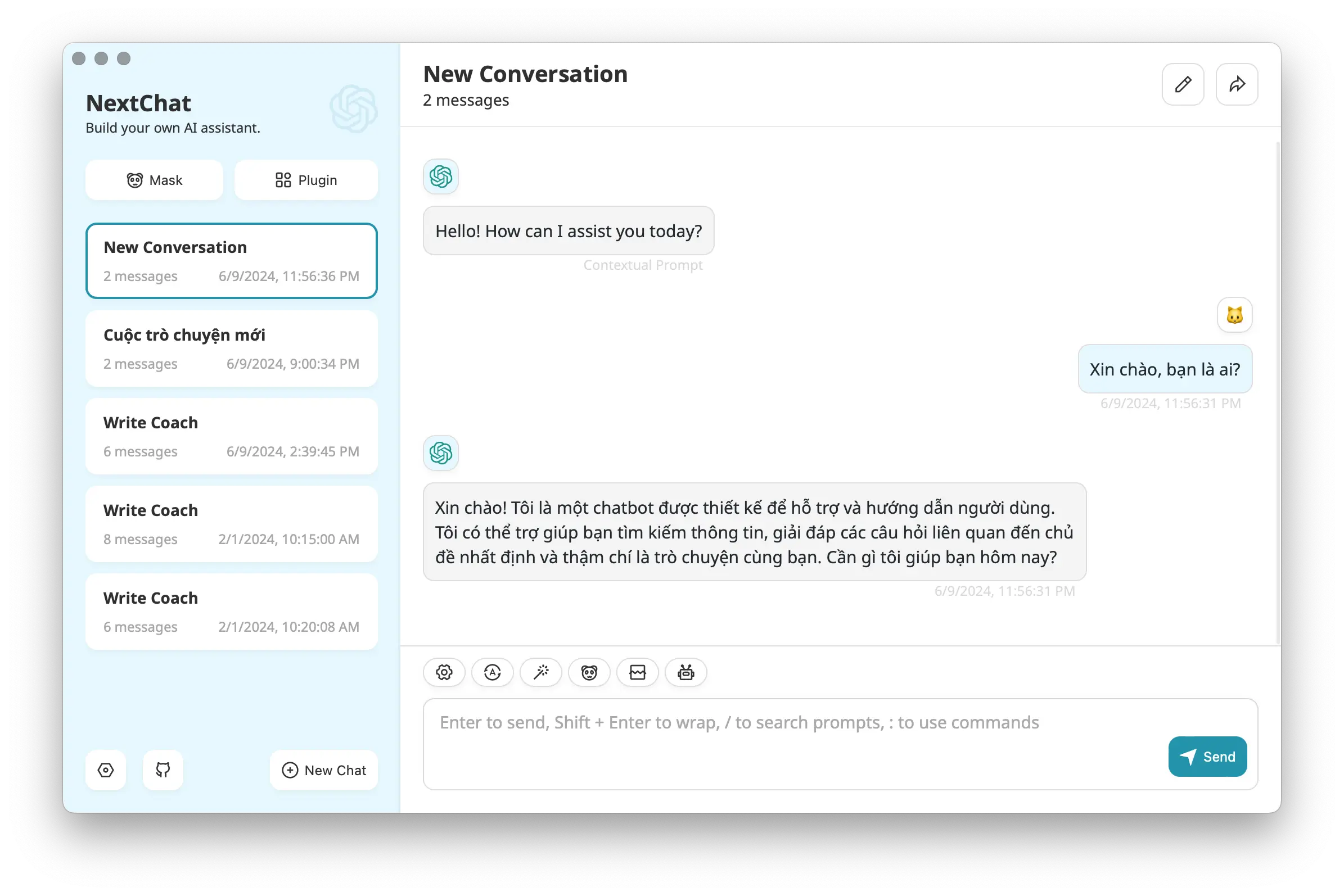

Let's try the new API right now.

Conclusion

Ollama makes it easy to install and use large language models. It supports many popular open-source models for free and, best of all, provides a ChatGPT-compatible API, which makes it a great substitute for ChatGPT in simple everyday tasks.


Comments (1)

Could I ask you a quick question about this? I downloaded the Windows version of ollama.exe from its homepage because I couldn't use curl, but when I connected the API to NextChat the way you described, I got a "Failed to fetch" error. Is there any way to fix it?


I already answered you on Facebook, right? 😅